Standard RAG is like reading a book one sentence at a time, out of order. We need something new.

When you read a book, you do not jump randomly between paragraphs, hoping to piece together the story. Yet that is exactly what traditional Retrieval-Augmented Generation (RAG) systems do with your data. This approach is fundamentally broken if you care about real understanding.

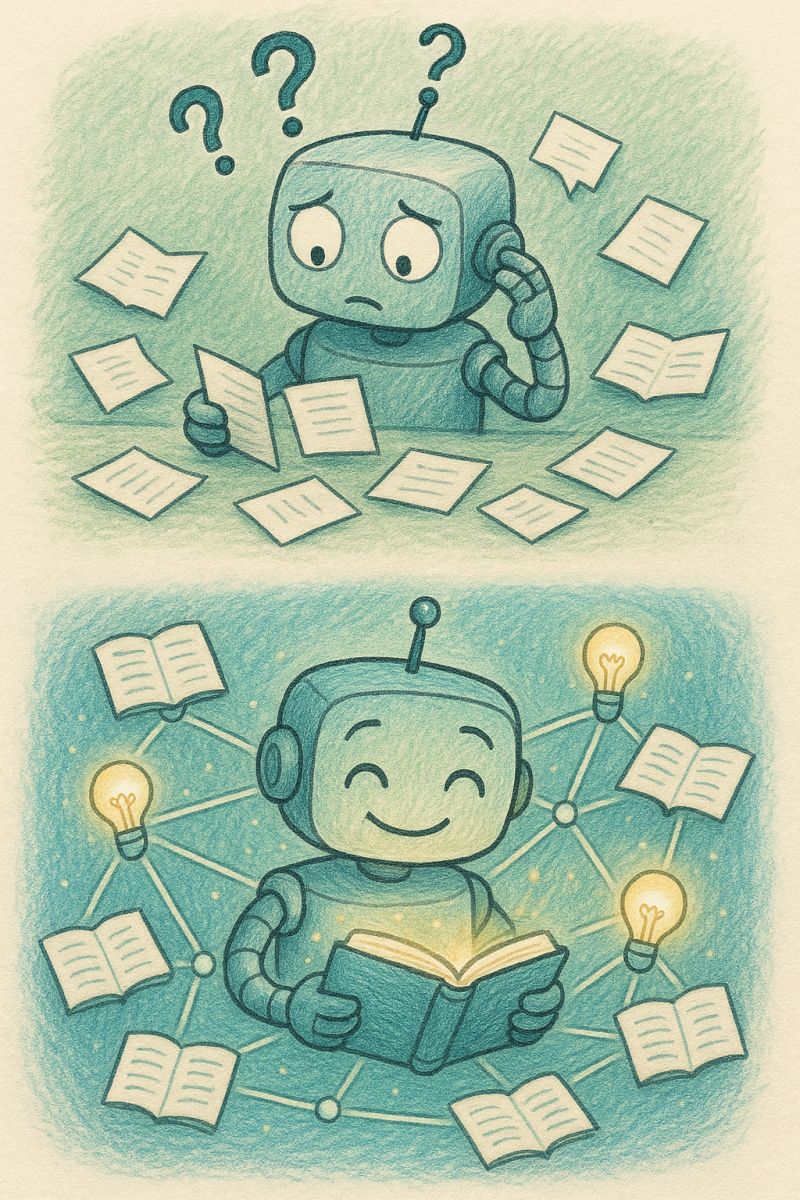

Most RAG systems take your documents and chop them into tiny, isolated chunks. Each chunk lives in its own bubble. When you ask a question, the system retrieves a handful of these fragments and expects the AI to make sense of them. The result is a disconnected, context-poor answer that often misses the bigger picture.

This is like trying to understand a novel by reading a few random sentences from different chapters. You might get a sense of the topic, but you will never grasp the full story or the relationships between ideas.

Real understanding requires more than just finding relevant information. It demands context and the ability to see how pieces of knowledge relate to each other. This is where standard RAG falls short. It treats knowledge as a stack of random pages, not as a coherent whole.
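To make the problem concrete, here is a deliberately naive sketch of chunk-and-retrieve in Python. It simplifies heavily and does not reproduce any particular RAG library: chunks are fixed 8-word windows, and relevance is plain word overlap standing in for embedding similarity. The example document and the `chunk`, `word_counts` and `retrieve` helpers are hypothetical.

```python
import re
from collections import Counter


def chunk(text: str, size: int = 8) -> list[str]:
    """Split text into fixed-size word windows, ignoring sentence boundaries."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def word_counts(text: str) -> Counter:
    """Lower-cased word counts: a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(chunks: list[str], query: str, k: int = 2) -> list[str]:
    """Return the k chunks sharing the most words with the query.

    Each chunk is scored on its own; nothing records which chunk
    came before or after it, or what it refers to.
    """
    q = word_counts(query)
    return sorted(chunks, key=lambda c: sum((word_counts(c) & q).values()), reverse=True)[:k]


doc = ("Alice founded Acme in 2010. Acme later acquired Beta Labs. "
       "Beta Labs builds battery sensors. Bob is the CEO of Beta Labs.")
chunks = chunk(doc)
top = retrieve(chunks, "Who runs the company Acme acquired?")
for c in top:
    print(c)
```

Answering the question needs two linked facts (Acme acquired Beta Labs; Bob is the CEO of Beta Labs). The fixed window has cut "Bob" away from "is the CEO of Beta Labs", so neither retrieved chunk names him, and nothing in the chunk store records that the fragments belong together.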

Time for a totally new approach.

Enter Graph RAG

Graph RAG changes the game. Instead of isolated chunks, it builds a rich network of interconnected knowledge. Think of it as a well-organised library, complete with cross-references and relationships. When you ask a question, Graph RAG does not just find relevant information. It understands how everything connects, delivering answers that are both accurate and deeply contextual.

This is not just theory. Research shows that Graph RAG systems can reduce token usage by 26% to 97% while delivering more accurate, contextual responses. The difference is not subtle. By understanding relationships, Graph RAG provides answers that make sense, not just answers that match keywords.[1]
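The idea of a network of interconnected knowledge can be sketched in a few lines of Python: facts stored as (subject, relation, object) triples, indexed by entity, and retrieval that expands outward from the entities a question mentions. This is a minimal illustration, not any tool's implementation. The triples and the `build_graph` and `graph_context` helpers are hypothetical; real Graph RAG systems typically extract triples with an LLM and keep them in a graph store rather than a dict.

```python
from collections import defaultdict

# Hypothetical hand-written facts as (subject, relation, object) triples.
TRIPLES = [
    ("Alice", "founded", "Acme"),
    ("Acme", "acquired", "Beta Labs"),
    ("Beta Labs", "builds", "battery sensors"),
    ("Bob", "is CEO of", "Beta Labs"),
]


def build_graph(triples):
    """Index every triple under both of its entities so edges can be walked either way."""
    graph = defaultdict(list)
    for s, r, o in triples:
        graph[s].append((s, r, o))
        graph[o].append((s, r, o))
    return graph


def graph_context(graph, seeds, hops=2):
    """Collect every fact within `hops` edges of the seed entities, as sentences."""
    seen = set(seeds)
    frontier = list(seeds)
    facts = []
    for _ in range(hops):
        next_frontier = []
        for node in frontier:
            for fact in graph.get(node, []):
                if fact not in facts:
                    facts.append(fact)
                for neighbour in (fact[0], fact[2]):
                    if neighbour not in seen:
                        seen.add(neighbour)
                        next_frontier.append(neighbour)
        frontier = next_frontier
    return [f"{s} {r} {o}" for s, r, o in facts]


graph = build_graph(TRIPLES)
# The question "Who runs the company Acme acquired?" mentions Acme, so start there.
for line in graph_context(graph, ["Acme"], hops=2):
    print(line)
```

Two hops from Acme reach "Bob is CEO of Beta Labs", and that fact arrives together with the edge that connects it to the question ("Acme acquired Beta Labs"). With `hops=1` the context stops at Acme's direct neighbours and Bob never appears, which is the trade-off between context size and reach.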

Two Tools to Try

If you are ready to experiment with Graph RAG in practice, here are two tools worth your time. Both tools are open source and actively developed.

TrustGraph

TrustGraph is an open-source platform designed to make knowledge graph RAG practical and production-ready. It lets you build, ship, and manage knowledge graphs and vector embeddings as modular “knowledge cores”.

TrustGraph is fully containerised, so you can deploy it anywhere: local, cloud, or on-prem. Its TrustRAG engine automates the process of extracting entities and relationships from your data, building a graph, and then retrieving context-rich answers through a chat interface.

If you want to move beyond cobbling together vector databases and LLM APIs, TrustGraph gives you a unified stack to manage knowledge, control access, and deliver reliable, context-aware AI.
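The extraction step is what turns raw text into graph structure. Graph RAG pipelines commonly prompt an LLM for this; the sketch below swaps in a toy pattern matcher so it runs without any model or service. It is not TrustGraph's API, and the `RELATIONS` list and `extract_triples` helper are hypothetical.

```python
import re

# Hypothetical relation phrases; an LLM-based extractor would not need a fixed list.
RELATIONS = ["founded", "acquired", "builds", "is CEO of"]


def extract_triples(text):
    """Toy extractor: split into sentences and match 'Subject <relation> Object'."""
    triples = []
    for sentence in re.split(r"(?<=\.)\s+", text.strip()):
        sentence = sentence.rstrip(".")
        for rel in RELATIONS:
            match = re.fullmatch(rf"(.+?) {re.escape(rel)} (.+)", sentence)
            if match:
                triples.append((match.group(1), rel, match.group(2)))
                break  # one relation per sentence is enough for this sketch
    return triples


text = "Alice founded Acme. Acme acquired Beta Labs. Bob is CEO of Beta Labs."
for triple in extract_triples(text):
    print(triple)
```

Each triple becomes an edge in the graph. Entities that appear in more than one sentence (here Acme and Beta Labs) become the shared nodes that tie separate facts together, and sentences with no recognised relation are simply skipped.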

Mem0

Mem0.ai is a memory layer for AI agents that goes far beyond standard RAG. While it supports both vector and graph-based storage, the real breakthrough is in its graph memory. Instead of just storing facts as isolated chunks, Mem0 builds a dynamic knowledge graph from your data, automatically extracting entities and relationships from conversations or documents.

This graph structure enables your AI to retrieve not just relevant facts, but the connections between them. It can perform multi-hop reasoning, following chains of relationships to answer complex questions. The system maintains context and coherence across long conversations or sessions, while adapting and updating its memory as new information arrives, without losing the bigger picture.

Benchmarks show Mem0’s graph memory delivers up to 26% higher accuracy and 90% lower token usage than standard approaches, with much faster response times. If you want your agents to truly understand, remember, and reason, not just react, Mem0’s graph-based memory is a major step forward. Dive into the open source repo or the research paper for more.
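Multi-hop reasoning over a graph memory amounts to following a chain of edges from one entity to another. The Python sketch below shows that with a breadth-first search, plus a deliberately simple update rule: each (subject, relation) pair holds one value, so a newer fact overwrites the older one. Mem0's actual update logic is not reproduced here, and `GraphMemory`, `remember` and `path` are hypothetical names.

```python
from collections import deque


class GraphMemory:
    """Toy graph memory: one object per (subject, relation), so newer facts replace older ones."""

    def __init__(self):
        self.edges = {}  # (subject, relation) -> object

    def remember(self, subject, relation, obj):
        # Overwrite any earlier value for the same subject and relation.
        self.edges[(subject, relation)] = obj

    def neighbours(self, node):
        """Yield (fact, next_node) pairs, walking edges in both directions."""
        for (s, r), o in self.edges.items():
            if s == node:
                yield (s, r, o), o
            elif o == node:
                yield (s, r, o), s

    def path(self, start, goal):
        """Breadth-first search for the shortest chain of facts linking two entities."""
        queue = deque([(start, [])])
        visited = {start}
        while queue:
            node, chain = queue.popleft()
            if node == goal:
                return chain
            for fact, nxt in self.neighbours(node):
                if nxt not in visited:
                    visited.add(nxt)
                    queue.append((nxt, chain + [fact]))
        return None  # no chain of relationships connects the two entities


mem = GraphMemory()
mem.remember("Alice", "works at", "Acme")
mem.remember("Acme", "is based in", "Berlin")
mem.remember("Berlin", "is in", "Germany")
before = mem.path("Alice", "Germany")  # Alice -> Acme -> Berlin -> Germany

# New information arrives: Alice changes jobs, and the new employer is linked in.
mem.remember("Alice", "works at", "Initech")
mem.remember("Initech", "is based in", "Berlin")
after = mem.path("Alice", "Germany")  # Alice -> Initech -> Berlin -> Germany

print(before)
print(after)
```

A question such as "Which country does Alice work in?" is answered by the three-hop chain rather than by any single stored fact, and the chain changes as soon as the memory is updated. A per-key overwrite is the simplest possible conflict rule; a real memory layer has to decide when a new fact replaces an old one and when both should be kept.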

If you are serious about getting more from your data, try them out and see how graph-based retrieval changes what your AI can do.

Which would be most useful? It depends on your use case. Choose TrustGraph if you need to build a solid foundation from a large collection of documents. Its architecture is built for bulk ingestion and creating a comprehensive knowledge base. Go with Mem0 if your system needs to learn and grow through ongoing conversations and interactions. Its graph-based memory excels at adapting to new information while maintaining context.

The future of AI is not about having more data. It is about understanding the relationships within your data. If you want your AI to deliver real value, you need to move beyond standard RAG and embrace approaches that prioritise context and connection.

For more on how this fits into the broader evolution of AI agents and knowledge management, see Building the Future.

How are you managing data retrieval within your AI system?

[1] For a deeper dive into the research, see these papers: Comparative Analysis of Retrieval Systems in the Real World and GraphRAG: Graph-based Retrieval-Augmented Generation.
