The Challenge

Cherrypick had operated a basic meal generator since early 2023. Customers could select how many meals they wanted for the week, and the system would generate a plan. They could reject meals and ask for alternatives, but there was no way to specify preferences or understand the reasoning behind recipe selections.

Customer feedback was clear: they wanted more personalisation and explanations for why certain recipes were chosen. The existing system felt rigid and opaque, leading to frequent plan changes and lower engagement with the generated meal plans.

This presented a perfect opportunity to explore how LLMs could add genuine value beyond the typical chatbot implementations flooding the market.

The Solution

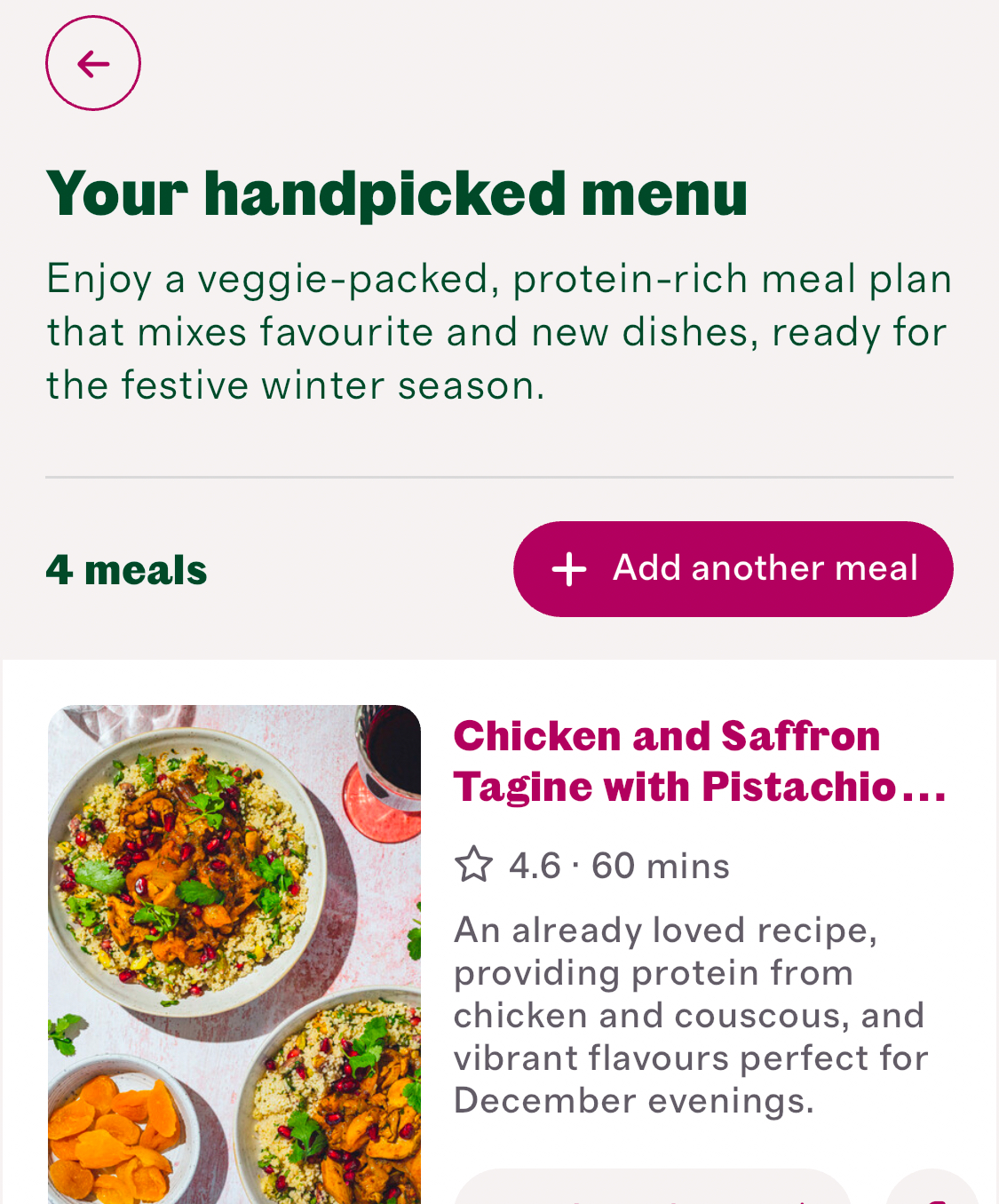

We built a production LLM system that creates truly personalised meal plans with natural language explanations. The breakthrough was recognising that this was not a chat problem but a completion problem.

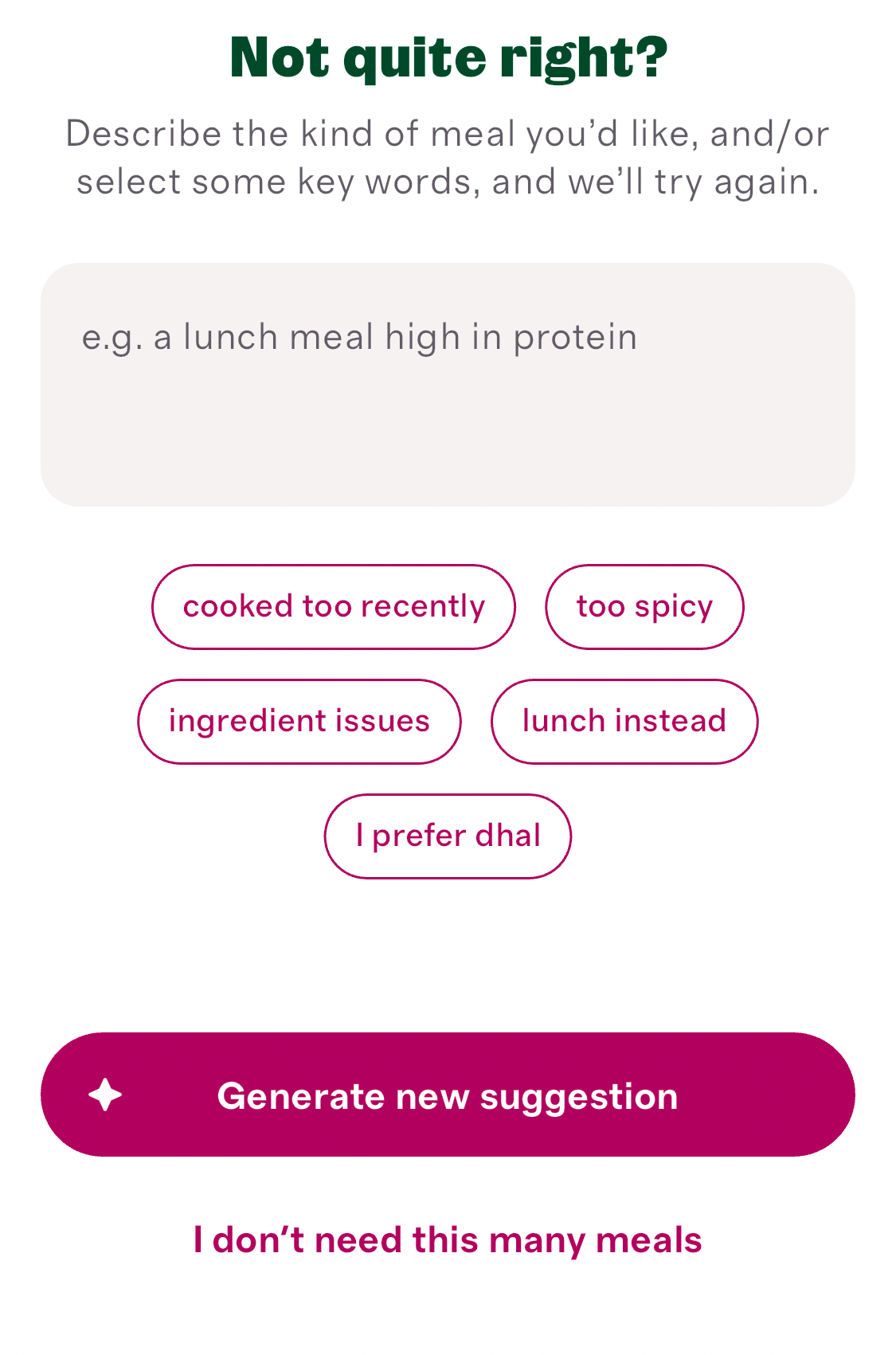

Instead of forcing customers into lengthy conversations, we designed an interface where meal plans are generated with one click. Customers can then refine their plans using LLM-generated rejection options that feel customised and natural. This approach eliminated conversation fatigue while keeping the personalisation benefits of LLMs.
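To make the "completion, not chat" idea concrete, here is a minimal sketch of how a one-click request might be assembled: one prompt in, one structured JSON plan out, including the swap reasons the interface can offer as rejection options. The function name, prompt wording and JSON shape are all hypothetical. The case study does not describe Cherrypick's actual prompt.

```python
import json


def build_plan_prompt(preferences: str, recipes: list[dict], n_meals: int) -> str:
    """Build a single completion prompt for a weekly plan (hypothetical format)."""
    # Only the recipes passed in are listed, so the model has a closed set of IDs.
    recipe_lines = "\n".join(f"- {r['id']}: {r['title']}" for r in recipes)
    # Assumed output shape: chosen meals with explanations, plus rejection options.
    schema = {
        "meals": [{"recipe_id": "string", "explanation": "string"}],
        "rejection_options": ["string"],
    }
    return (
        f"Customer preferences: {preferences}\n"
        f"Choose exactly {n_meals} recipes from this list, using only the IDs shown:\n"
        f"{recipe_lines}\n"
        "For each meal, explain in one sentence why it suits this customer.\n"
        "Also suggest short reasons the customer might give for swapping a meal.\n"
        f"Respond with JSON only, matching this shape:\n{json.dumps(schema)}"
    )


prompt = build_plan_prompt(
    "vegetarian, quick weeknight dinners",
    [{"id": "r12", "title": "Halloumi traybake"}, {"id": "r40", "title": "Mushroom risotto"}],
    n_meals=2,
)
print(prompt)
```

Because the whole plan comes back in one structured response, the interface can render meals, explanations and swap options without any back-and-forth.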

The system excels at tasks that uniquely benefit from LLM capabilities: understanding dietary preferences, combining compatible recipes, and generating natural explanations for choices. These were problems that would have been difficult to solve effectively with traditional programming approaches.

Technical Implementation

The implementation required careful consideration of both technical and business constraints. We calculated costs upfront, understanding that consumer applications with large user bases and small margins cannot sustain expensive LLM interactions.

Our meal generator requires only a few LLM calls per plan generation, compared to the dozens of messages typical in chat sessions. This made the system financially viable where other approaches would have eroded profit margins entirely.
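The cost argument is simple arithmetic. The sketch below compares a plan that takes a few completion calls with a chat session that resends its growing history on every turn. Every price, token count and call count here is an assumption chosen for illustration, not a figure from the project.

```python
# All prices and token counts below are assumptions for illustration only.
PRICE_PER_1K_INPUT = 0.003   # assumed cost per 1,000 input tokens
PRICE_PER_1K_OUTPUT = 0.015  # assumed cost per 1,000 output tokens


def call_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost of a single LLM call at the assumed prices."""
    return (input_tokens / 1000) * PRICE_PER_1K_INPUT + (output_tokens / 1000) * PRICE_PER_1K_OUTPUT


def plan_cost(calls: int = 3, input_tokens: int = 6000, output_tokens: int = 800) -> float:
    """A one-click plan: a few completion calls, each carrying the recipe context."""
    return calls * call_cost(input_tokens, output_tokens)


def chat_cost(turns: int = 30, base_input: int = 6000, tokens_per_message: int = 150) -> float:
    """A chat session: every turn resends the context plus the conversation so far."""
    total = 0.0
    for turn in range(turns):
        history = turn * 2 * tokens_per_message  # earlier user and assistant messages
        total += call_cost(base_input + history, tokens_per_message)
    return total


print(f"plan: {plan_cost():.2f}, chat: {chat_cost():.2f}")
```

With these assumed numbers the chat session costs roughly eleven times as much as a plan, and the gap widens as sessions get longer, because each turn pays again for everything said before it. Multiplied across a large customer base with small margins, that difference decides viability.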

Quality control became paramount given the unpredictable nature of LLMs. We built a multi-layered evaluation system, starting with automated checks that validate the JSON structure and verify every recipe ID against the recipes provided in the context. This prevented hallucinated recipes from reaching customers.
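A first automated layer like the one described can be a plain function that parses the model's output and checks every recipe ID against the set that was actually sent. This is a minimal sketch; the field names `meals`, `recipe_id` and `explanation` are assumptions carried over from a hypothetical output shape, not Cherrypick's schema.

```python
import json


def validate_plan(raw: str, allowed_ids: set[str], n_meals: int) -> list[str]:
    """Return a list of problems; an empty list means the plan passed."""
    try:
        plan = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(plan, dict) or not isinstance(plan.get("meals"), list):
        return ["missing 'meals' list"]

    errors = []
    meals = plan["meals"]
    if len(meals) != n_meals:
        errors.append(f"expected {n_meals} meals, got {len(meals)}")

    seen = set()
    for i, meal in enumerate(meals):
        if not isinstance(meal, dict):
            errors.append(f"meal {i} is not an object")
            continue
        rid = meal.get("recipe_id")
        # An ID outside the provided context is treated as a hallucination.
        if not isinstance(rid, str) or rid not in allowed_ids:
            errors.append(f"meal {i}: unknown recipe_id {rid!r}")
        elif rid in seen:
            errors.append(f"meal {i}: duplicate recipe_id {rid!r}")
        else:
            seen.add(rid)
        explanation = meal.get("explanation")
        if not isinstance(explanation, str) or not explanation.strip():
            errors.append(f"meal {i}: missing explanation")
    return errors


allowed = {"r12", "r40"}
print(validate_plan('{"meals": [{"recipe_id": "r99", "explanation": "Quick"}]}', allowed, 1))
```

Returning a list of problems rather than a single boolean makes failures easy to log and count, which is useful when the same checks are later reused to compare models.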

Expert review formed the second evaluation layer. Cherrypick’s Head of Food, Sophie, assesses generated plans for nutritional balance and flavour combinations, ensuring meals work well together throughout the week. These evaluations became training data for continuous improvement.
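The case study does not say how reviews were turned into training data. One plausible approach, assumed here purely for illustration, is to record each review with its prompt and output, keep only plans approved on both criteria, and export them as prompt/completion pairs in JSON Lines. The `PlanReview` fields and the approval rule are hypothetical.

```python
import json
from dataclasses import dataclass


@dataclass
class PlanReview:
    """One expert review of a generated plan (hypothetical fields)."""
    prompt: str          # the prompt that produced the plan
    plan_json: str       # the model's raw output
    balanced: bool       # reviewer judged nutritional balance acceptable
    flavours_work: bool  # reviewer judged the week's flavours compatible
    notes: str = ""      # free-text feedback, useful for prompt revisions


def to_training_jsonl(reviews: list[PlanReview]) -> str:
    """Keep plans approved on both criteria, one JSON object per line."""
    lines = [
        json.dumps({"prompt": r.prompt, "completion": r.plan_json})
        for r in reviews
        if r.balanced and r.flavours_work
    ]
    return "\n".join(lines)


reviews = [
    PlanReview("p1", '{"meals": []}', balanced=True, flavours_work=True),
    PlanReview("p2", "{}", balanced=True, flavours_work=False, notes="Two curries in a row"),
]
print(to_training_jsonl(reviews))
```

Rejected plans and their notes are just as valuable, for example as regression cases for the automated checks, so a real pipeline would likely keep them too.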

Context curation proved crucial for reliable results. Rather than sending the model our full recipe database and risking dangerous selections, we provide only the recipes the customer can actually eat. Listing every eligible recipe in the prompt costs tokens, but it delivers consistent, safe results.
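The key property of context curation is that filtering happens in ordinary code before the model sees anything, so the model cannot pick an unsafe recipe even if it ignores its instructions; combined with the recipe ID check, anything outside the filtered list is rejected. A minimal sketch follows, assuming recipes carry allergen and diet tags; the `Recipe` and `Customer` types and their fields are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Recipe:
    id: str
    title: str
    allergens: frozenset[str] = frozenset()  # allergens the recipe contains
    diets: frozenset[str] = frozenset()      # diets it satisfies, e.g. "vegetarian"


@dataclass
class Customer:
    allergies: set[str] = field(default_factory=set)
    required_diets: set[str] = field(default_factory=set)


def eligible_recipes(recipes: list[Recipe], customer: Customer) -> list[Recipe]:
    """Drop any recipe the customer cannot eat before it reaches the prompt."""
    return [
        r for r in recipes
        if not (r.allergens & customer.allergies)   # no shared allergens
        and customer.required_diets <= r.diets      # meets every required diet
    ]


recipes = [
    Recipe("r1", "Peanut noodles", frozenset({"peanut"}), frozenset({"vegetarian"})),
    Recipe("r2", "Chicken pie"),
    Recipe("r3", "Halloumi traybake", frozenset({"milk"}), frozenset({"vegetarian"})),
]
customer = Customer(allergies={"peanut"}, required_diets={"vegetarian"})
print([r.id for r in eligible_recipes(recipes, customer)])
```

The IDs of the returned recipes double as the allowed set for output validation, so one filter serves both the prompt and the safety check.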

Results

The system delivered measurable business impact that validated our approach. Customers began changing their meal plans 30% less frequently, indicating they were more satisfied with the initial recommendations. More importantly, basket usage of meal plans increased by 14%, demonstrating that customers found the personalised plans more actionable and appealing.

These improvements came from a production-ready system serving real customers daily. The multi-layered evaluation framework proved its worth during launch, catching quality issues before they reached users. Despite initial concerns about LLM costs, the system operates comfortably within budget constraints thanks to careful interface design.

Beyond the meal generator itself, we shipped major additional features, including a health scores system, while maintaining high delivery standards. The evaluation infrastructure we built became reusable for future AI features, creating lasting value beyond this single project.

"We built a production LLM meal generator that made our customers happier and increased revenue. Our AI system reduced customer plan changes by 30% (meaning happier customers) and increased basket usage by 14% (meaning more revenue per customer). We shipped major new features including health scores while maintaining high quality delivery."

Key Learnings

The success came from treating this as a product challenge first, then finding the right technical implementation. We learned that LLMs excel when applied to problems that genuinely benefit from their unique capabilities. Natural language explanations and dietary preference understanding were perfect fits, but we would never use LLMs for simple categorisation tasks.

Interface design proved more important than the underlying technology. Our guided approach with LLM-generated options delivered superior results compared to chatbot interfaces. Users received personalisation benefits without conversation fatigue, creating a sustainable interaction model.

Building evaluation frameworks from day one prevented quality disasters and enabled confident model comparisons. This upfront investment paid dividends during scaling, allowing us to iterate quickly while maintaining reliability.
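An automated evaluation layer makes model comparison mechanical: run each candidate model on the same fixed prompts, apply the same checks, and rank by pass rate. The sketch below assumes that setup; the model names and the toy JSON check are placeholders, and in practice the check would be the full validator, with expert review on top.

```python
import json
from typing import Callable

Check = Callable[[str], list[str]]  # returns a list of problems; empty means pass


def pass_rate(outputs: list[str], check: Check) -> float:
    """Fraction of outputs for which `check` reports no problems."""
    if not outputs:
        return 0.0
    return sum(1 for o in outputs if not check(o)) / len(outputs)


def compare_models(outputs_by_model: dict[str, list[str]], check: Check) -> list[tuple[str, float]]:
    """Rank models by pass rate on the same evaluation prompts."""
    rates = [(name, pass_rate(outs, check)) for name, outs in outputs_by_model.items()]
    return sorted(rates, key=lambda pair: pair[1], reverse=True)


def json_object_check(raw: str) -> list[str]:
    """Toy stand-in for a real validator: the output must be a JSON object."""
    try:
        return [] if isinstance(json.loads(raw), dict) else ["not a JSON object"]
    except json.JSONDecodeError:
        return ["invalid JSON"]


ranking = compare_models(
    {"model-a": ['{"meals": []}', "oops", "{}"], "model-b": ["{}", "{}", "{}"]},
    json_object_check,
)
print(ranking)
```

Keeping the check as a plain function argument means the same harness works for structural checks today and richer scoring later.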

Perhaps most importantly, we learned to work with LLM limitations rather than fight them. Careful context curation eliminated dangerous outputs while preserving the flexibility that makes these systems valuable. The key was designing constraints that enhanced reliability without sacrificing the core benefits.

The Impact

This case study demonstrates how to build LLM applications that actually work in production. Too many AI projects become investor demos rather than shipped products.

Putting the product problem ahead of the technology produced a system that improved the customer experience while operating within business constraints.

Want similar results for your team? Chris can help you identify where LLMs add real value and build systems that work in production, not just in demos.

